4) Universal Business Adapters

Universal Business Adapters are used for sources that have no pre-packaged business adapter, primarily non-standard sources such as Excel, CSV, and .dat files and, in some cases, legacy systems (mainframes, COBOL, etc.). Used adeptly, these adapters can even load data from unstructured sources (web catalogs, web logs, application server logs, etc.) and integrate it into the mainstream analytic warehouse. That is probably the best way to define universal adapters, but does the definition explain it all? I think not, so let's try a real-world example.

Suppose you are an ever-expanding organization. Your sources for the OBIA analytics warehouse were well defined and standardized; for example, you had three standard source systems (Ebiz, JD Edwards, and PeopleSoft) supplying all your product details. Now, as part of your long-term strategy, you have acquired another organization, "xyz corporation". This organization is a startup that still relies on Excel to load product details, so your concern is how to integrate the product details from xyz corporation into the mainstream analytic warehouse. Universal adapters can resolve this problem for you; the following process explains how to leverage them for this scenario in BIApps:

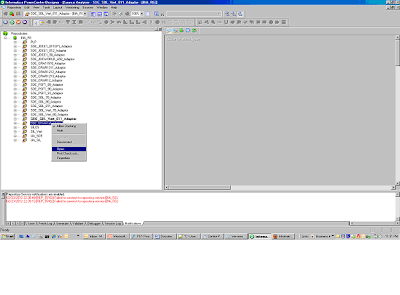

1) Open the folder SDE_Universal_Adaptor by right-clicking it and selecting Open

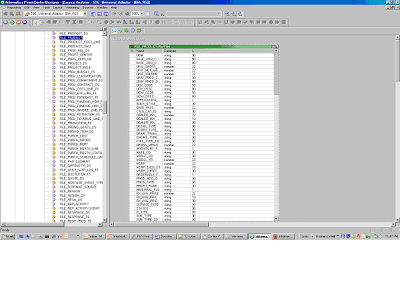

2) Open the mapping SDE_Universal_ProductDimension

3) Map the file to the equivalent source definition. There are two ways to do this:

3.1) Customize the output file from Xyz Corporation so that it matches the source definition in this mapping.

3.2) Change the source definition itself to match the output file from Xyz Corporation. This also requires changing the mapping flow.

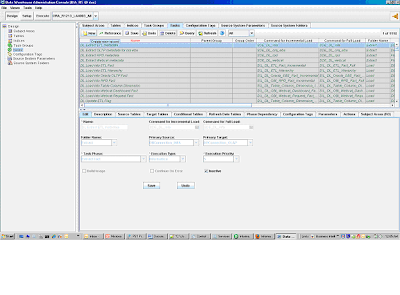
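Option 3.1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the Excel sheet has already been exported to CSV, and the column names in `TARGET_COLUMNS` and `COLUMN_MAP`, as well as the function `reshape_product_rows`, are hypothetical placeholders. Replace them with the columns of the flat-file source definition in your own repository and with the headers of the file you actually receive from Xyz Corporation.

```python
import csv
import io

# Hypothetical target layout: substitute the column names of the flat-file
# source definition used by SDE_Universal_ProductDimension in your repository.
TARGET_COLUMNS = ["PRODUCT_ID", "PRODUCT_NAME", "PRODUCT_TYPE", "DATASOURCE_NUM_ID"]

# Hypothetical mapping from the Xyz Corporation export headers to target columns.
COLUMN_MAP = {
    "Item Code": "PRODUCT_ID",
    "Item Name": "PRODUCT_NAME",
    "Category": "PRODUCT_TYPE",
}


def reshape_product_rows(source_file, target_file, datasource_num_id):
    """Rewrite the export so its columns match the assumed source definition.

    Returns the number of data rows written.
    """
    reader = csv.DictReader(source_file)
    missing = [c for c in COLUMN_MAP if c not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"source file is missing columns: {missing}")

    writer = csv.DictWriter(target_file, fieldnames=TARGET_COLUMNS, lineterminator="\n")
    writer.writeheader()
    count = 0
    for row in reader:
        # Rename each source column and trim stray whitespace from the values.
        out = {target: (row[src] or "").strip() for src, target in COLUMN_MAP.items()}
        # Tag every row with an identifier for the new source (assumed value).
        out["DATASOURCE_NUM_ID"] = datasource_num_id
        writer.writerow(out)
        count += 1
    return count


# In-memory demo data; in practice these would be real files opened with newline="".
xyz_export = io.StringIO("Item Code,Item Name,Category\nP-100, Widget ,Hardware\n")
staged = io.StringIO()
rows_written = reshape_product_rows(xyz_export, staged, "999")
print(staged.getvalue())
```

Reshaping the file outside the mapping keeps the delivered mapping flow untouched, which is the main attraction of option 3.1 over option 3.2.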

4) Define a task for this mapping in the DAC execution plan.

5) DAC has no predefined task for custom jobs, so this custom Universal Adapter mapping must be defined as a task and included in the DAC process flow. A few notes of caution:

· DAC determines the order in which mappings execute using its own predefined algorithms, so be careful about where this custom task falls in that order.

· Mappings are associated with DAC tasks through their names and folders. DAC also needs some predefined parameters to execute an Informatica mapping.

· Running this mapping as a micro ETL might compromise the consistency of the data warehouse.
